Why we migrated CompileBench to Harbor, the AI agent eval framework

Escaping the maintenance trap: how standardizing our AI evaluation harness with Harbor Framework improved reproducibility and collaboration.

Explore our research on AI agents, benchmarking, and evaluation

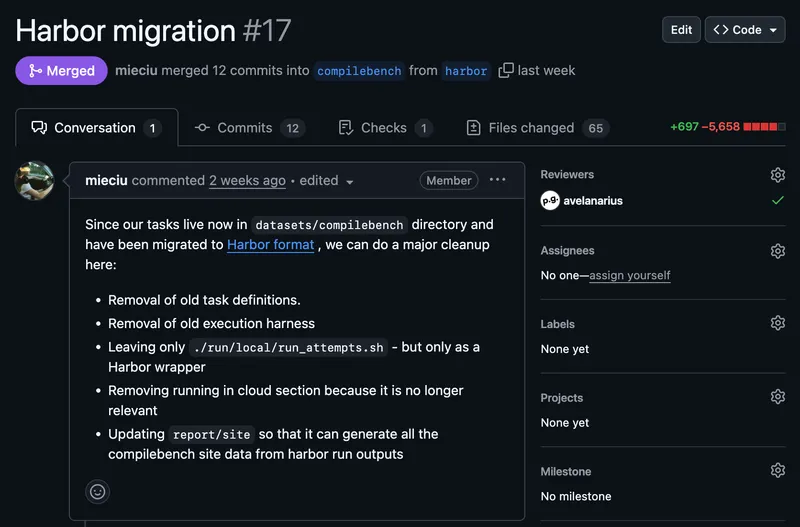

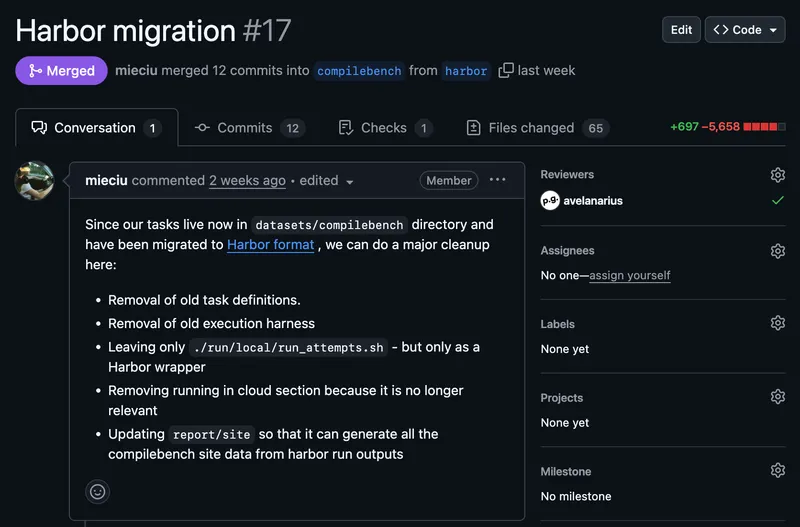

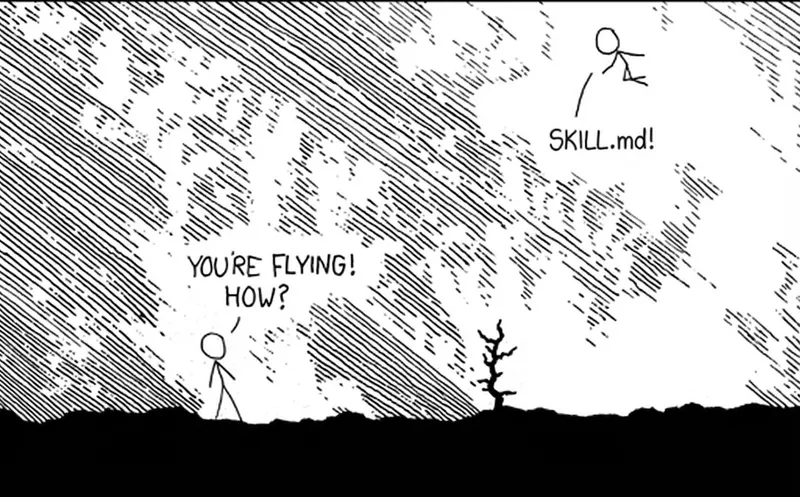

Comparing Google Antigravity and Claude Code for AI-assisted workflows, and why custom Claude Skills might be the better approach.

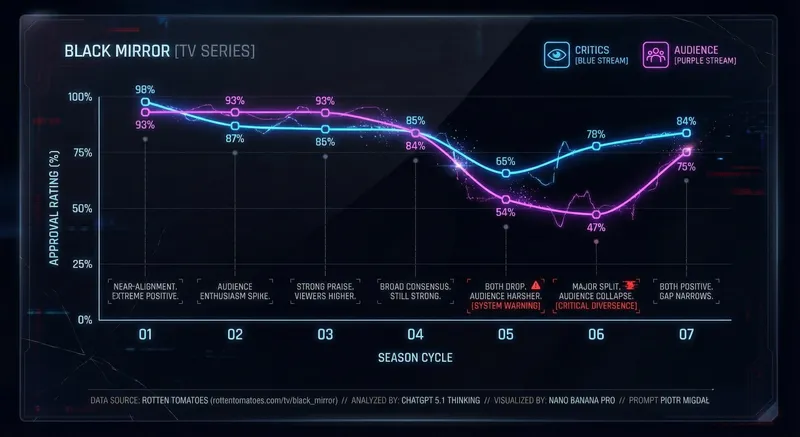

A reality check on AI enthusiasm: how a simple chart generated vitriolic reactions outside the tech bubble.

The Quesma database gateway IP has been acquired by Hydrolix to ensure continued support.
