BinaryAudit

Security analysis benchmark for AI coding agents. Tests models on detecting backdoors, timebombs, and malicious code in compiled binaries using reverse-engineering tools such as Ghidra and radare2.

Open-source benchmarks for evaluating AI coding agents on real-world software engineering tasks.

Stay tuned for new benchmarks and results.

OpenTelemetry instrumentation benchmark for AI coding agents. Tests models on real-world tasks that add distributed tracing, metrics, and logging to multi-language codebases.

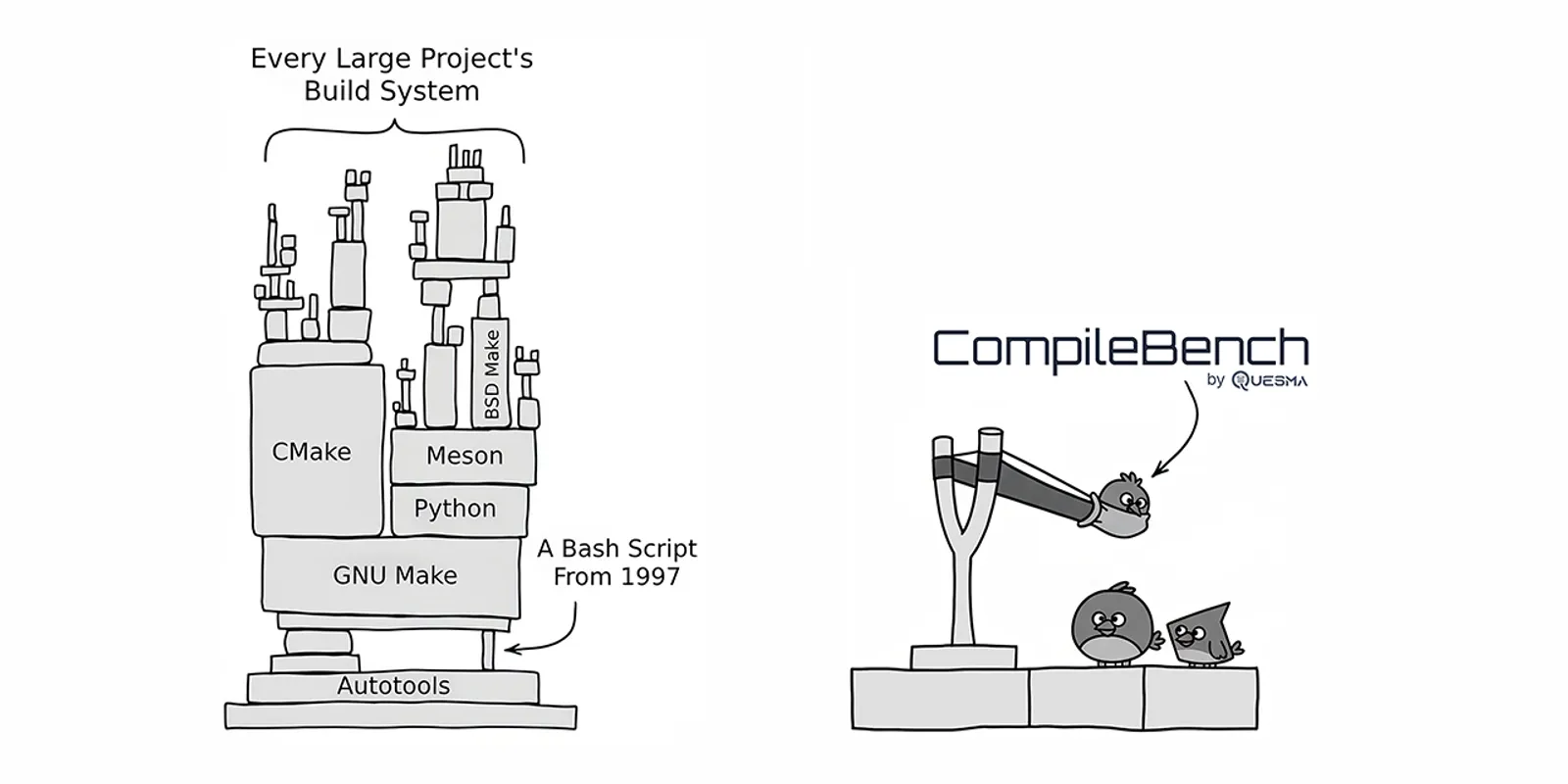

Build system benchmark for AI coding agents. Tests models on fixing compilation errors, updating dependencies, and navigating complex build configurations.